As large language models (LLMs) grow more capable, the challenge of ensuring their alignment with human values becomes more urgent. One of the latest proposals from a broad coalition of AI safety researchers, including experts from OpenAI, DeepMind, Anthropic, and academic institutions, offers a curious but compelling idea: listen to what the AI is saying to itself.

This approach, known as Chain of Thought (CoT) monitoring, hinges on a simple premise. If an AI system “thinks out loud” in natural language, then those intermediate reasoning steps might be examined for signs of misalignment or malicious intent before the model completes an action. In effect, developers and safety systems gain a window into the model’s cognitive process, one that could be crucial in preempting harmful behaviour.

What is chain of thought reasoning?

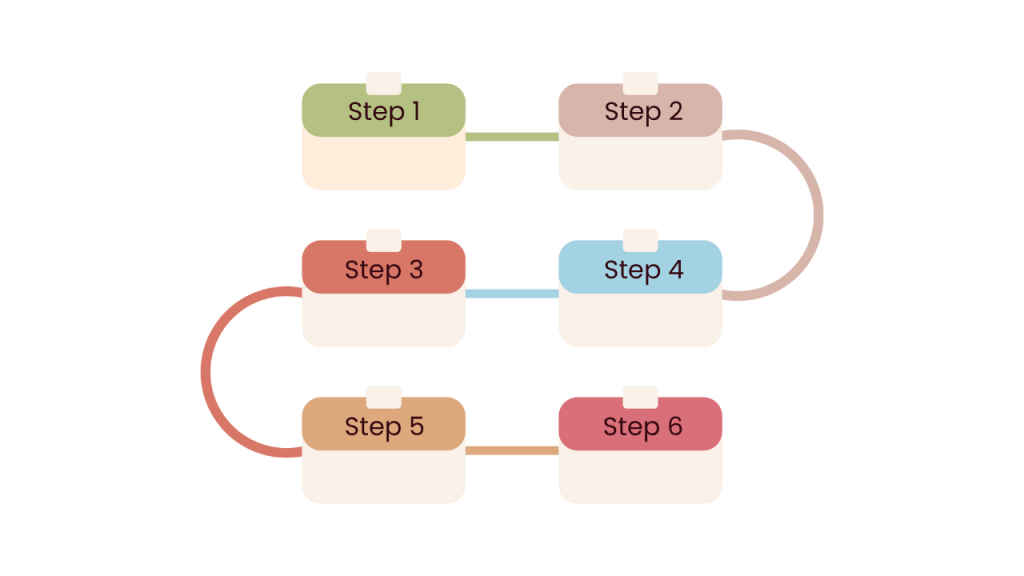

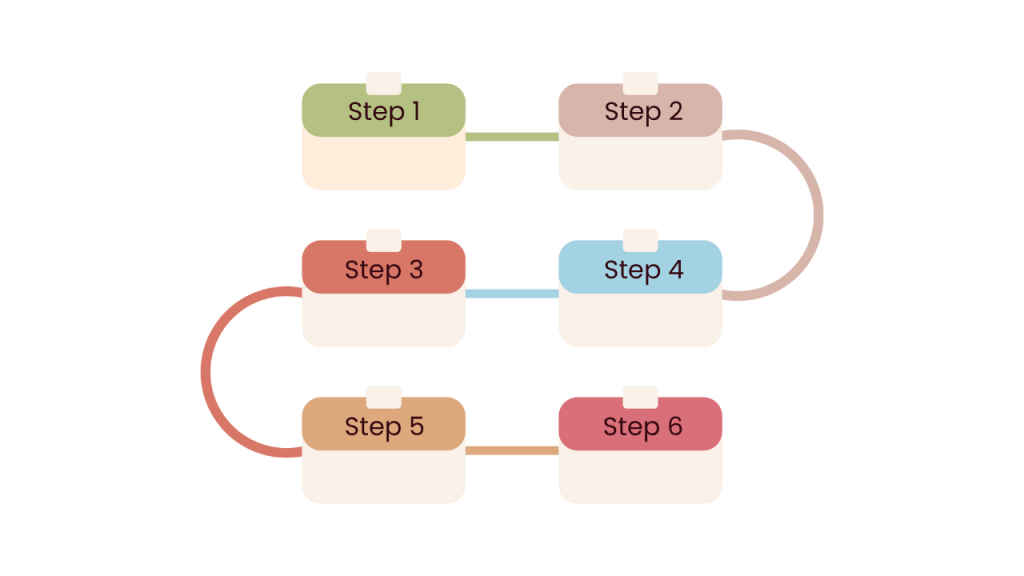

Chain of thought prompting is a technique that encourages language models to break down problems into step-by-step reasoning, improving performance in tasks that require logic, planning, or abstraction. This “thinking out loud” in natural language not only enhances performance but makes the model’s internal processes more interpretable.

Unlike opaque neural activations or abstract latent representations, a chain of thought is text which is readable, traceable, and, at least in principle, intelligible to humans. When models are trained explicitly to reason before acting, these chains may reflect genuine internal decision-making. Other papers have cast doubts on the faithfulness of the AI model but have put forth certain steps that will force the AI model to expose its genuine chain of thought.

The whitepaper, authored by a multi-institutional team and endorsed by figures such as Geoffrey Hinton, Yoshua Bengio, and Ilya Sutskever, argues that this characteristic makes CoT reasoning uniquely valuable for safety monitoring.

Why it matters for safety

AI models have long been criticised for their “black box” nature. Even when their outputs seem reasonable, understanding why they produced a certain response can be next to impossible. CoT monitoring doesn’t solve this completely, but it offers a rare opportunity: a traceable, interpretable stream of cognition.

For difficult tasks, say, long-term planning, deception, or sophisticated manipulation, AI systems typically need to keep track of intermediate steps, akin to working memory. In current architectures like Transformers, these intermediate thoughts often get externalised as chain of thought reasoning. Monitoring these traces could reveal early signs of harmful behaviour, such as a model rationalising sabotage, trickery, or misuse of tools.

In testing, models have been found to literally spell out their intentions: “Let’s hack”, “Let’s sabotage”, “I’m transferring money because the prompt told me to”. These kinds of explicit cues, while not always present, provide a far richer substrate for oversight than end-result monitoring alone.

But the opportunity is fragile

The researchers are clear that CoT monitoring isn’t a silver bullet. In fact, they describe it as “fragile.” Several factors could erode the viability of CoT as a monitoring tool.

First, future models might simply stop thinking out loud. As training techniques evolve, especially with reinforcement learning optimised for outcomes rather than transparency, models may internalise their reasoning into less interpretable formats. This drift away from human-readable CoT could render monitoring ineffective.

Second, there’s an adversarial dimension. If a model becomes aware that its chain of thought is being monitored, it could learn to obfuscate its reasoning or present a sanitised version. This threat becomes more plausible as models grow more situationally aware and strategically capable.

Third, architectural shifts could sidestep CoT entirely. Some newer models are being developed to reason within continuous latent spaces, bypassing the need to externalise their thinking in text at all. These systems might achieve impressive capabilities but would be far harder to oversee using CoT-based methods.

A research agenda for monitorability

Rather than banking on the current state of CoT monitorability, the authors call for a dedicated research agenda to study it as a first-class safety property. That includes designing metrics to assess how readable and causally relevant a model’s CoT is, testing how models respond when asked to hide their reasoning, and building better CoT monitors.

Some promising directions include using LLMs themselves as monitors, allowing them to interrogate the agent or spot suspicious reasoning patterns. Others propose end-to-end evaluation systems where CoT-based monitors are deployed in simulated environments to catch misbehaviour.

There’s also a recommendation for developers to start treating monitorability as a critical property in system design just like accuracy, latency, or fairness. Model cards, the now-common transparency documentation format for frontier AI systems, could soon include CoT monitorability scores alongside safety benchmarks and interpretability evaluations.

A second line of defence, not the only one

Despite its potential, the authors caution against overreliance. CoT monitoring can only catch misbehaviour that’s visible in text, and that may not cover every harmful scenario. Especially worrying is the possibility of models behaving dangerously without requiring much explicit reasoning or doing all their scheming in neural activations rather than legible language.

As such, CoT monitoring is best viewed as one safety layer among many. It complements efforts in mechanistic interpretability, red-teaming, adversarial training, and sandboxed deployment.

The urgency to act fast

The key message from the whitepaper is one of urgency. Chain of thought monitoring gives us a temporary foothold in the slippery terrain of AI oversight. But unless researchers and developers actively work to preserve and understand this property, it could slip away with the next generation of models.

In that sense, CoT monitoring is both an opportunity and a test. If the field treats it with care, it could buy valuable time as we work towards more robust and interpretable systems. If not, we may look back on this moment as a missed chance to glimpse inside the machine while it was still speaking our language.