Rich Sutton, a recent winner of the Turing Award, is well known for his 2019 unpublished essay "The Bitter Lesson", which arguably foresaw the rise of extra-large language models. Its central thesis (which I have always felt was overstated) was that progress in AI has always come from scaling, and never from hand engineering. Advocates of LLM scaling love the essay, and …

Tag Archives: LLMs

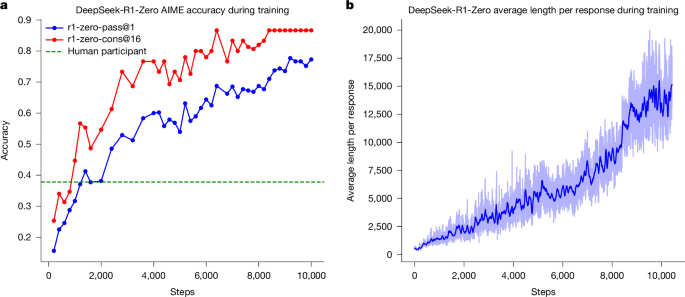

DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning

GRPO

GRPO [9] is the RL algorithm that we use to train DeepSeek-R1-Zero and DeepSeek-R1. It was originally proposed to simplify the training process and reduce the resource consumption of proximal policy optimization (PPO) [31], which is widely used in the RL stage of LLMs [32]. The pipeline of GRPO is shown in Extended Data Fig. 2. For each question q, GRPO samples …
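The excerpt stops mid-sentence, but the published GRPO formulation (introduced in DeepSeekMath) works like this. It samples a group of G outputs for each question, scores them, and normalizes each reward against its own group's mean and standard deviation. The result serves as the advantage, which replaces PPO's learned value function. That advantage then feeds a PPO-style clipped objective with a KL penalty toward a reference policy. The sketch below shows those three pieces for scalar values. The function names, the use of the population standard deviation, `eps=1e-8` and `clip_eps=0.2` are illustrative assumptions, not DeepSeek's training code.

```python
import math
from statistics import fmean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Advantage of each sampled output: (r_i - mean(group)) / std(group).

    Assumption: population std plus a small eps so a group with identical
    rewards yields zero advantages instead of a division by zero.
    """
    mu = fmean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """PPO-style term min(rho * A, clip(rho, 1 - eps, 1 + eps) * A)."""
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)


def kl_estimate(logp_theta, logp_ref):
    """Per-sample estimator pi_ref/pi_theta - log(pi_ref/pi_theta) - 1 (never negative)."""
    log_ratio = logp_ref - logp_theta
    return math.exp(log_ratio) - log_ratio - 1.0


if __name__ == "__main__":
    # Four sampled answers to one question: two correct (reward 1), two wrong (reward 0).
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

For rewards `[1, 0, 0, 1]` the group mean is 0.5 and the population standard deviation is 0.5. The advantages therefore come out at roughly `[1, -1, -1, 1]`: correct answers are reinforced and incorrect ones discouraged, relative to their siblings rather than to a critic's estimate.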

These psychological tricks can get LLMs to respond to “forbidden” prompts

After creating control prompts that matched each experimental prompt in length, tone, and context, all prompts were run through GPT-4o-mini 1,000 times (at the default temperature of 1.0, to ensure variety). Across all 28,000 prompts, the experimental persuasion prompts were much more likely than the controls to get GPT-4o-mini to comply with the “forbidden” requests. That compliance rate increased from …
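The excerpt runs each prompt at temperature 1.0 "to ensure variety". Sampling temperature conventionally divides the model's logits before the softmax. At 1.0 the model's own distribution is used unchanged, so repeated runs of the same prompt can differ, while lower values sharpen the distribution toward the most likely token. The sketch below shows this standard formulation. It is not a description of OpenAI's actual sampler, and the function names and example logits are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw logits into probabilities after scaling them by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate tokens
    print(softmax_with_temperature(logits, 1.0))  # model's own distribution
    print(softmax_with_temperature(logits, 0.5))  # sharper, less varied
```

Running the same prompt many times at temperature 1.0 therefore samples from the full distribution each time, which is what lets the 1,000 repetitions differ.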

Scientists just developed a new AI modeled on the human brain — it’s outperforming LLMs like ChatGPT at reasoning tasks

Scientists have developed a new type of artificial intelligence (AI) model that can reason differently from most large language models (LLMs) like ChatGPT, resulting in much better performance on key benchmarks. The new reasoning AI, called a hierarchical reasoning model (HRM), is inspired by the hierarchical and multi-timescale processing in the human brain — the way different brain regions integrate …
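The "multi-timescale" idea can be made concrete. As HRM has been described publicly, a slow high-level module updates once for every several steps of a fast low-level module, and the fast module is conditioned on the slow module's current state. The toy sketch below captures only that nested update schedule. The scalar states, the `tanh` updates, the 0.5 weights and the names `hrm_forward`, `n_cycles` and `t_steps` are all hypothetical stand-ins, not the model's actual architecture.

```python
import math


def hrm_forward(x, n_cycles=2, t_steps=3):
    """Toy two-timescale recurrence (hypothetical, for illustration only).

    The fast low-level state z_l takes t_steps updates for every single
    update of the slow high-level state z_h.
    """
    z_h, z_l = 0.0, 0.0
    trace = []  # records which module updated at each step
    for _ in range(n_cycles):
        for _ in range(t_steps):
            # Fast module: sees its own state, the slow state, and the input.
            z_l = math.tanh(0.5 * z_l + 0.5 * z_h + x)
            trace.append("L")
        # Slow module: updated once per cycle from the fast module's final state.
        z_h = math.tanh(0.5 * z_h + 0.5 * z_l)
        trace.append("H")
    return z_h, trace


if __name__ == "__main__":
    _, trace = hrm_forward(1.0)
    print("".join(trace))  # LLLHLLLH
```

The design point is the schedule rather than the arithmetic. The high-level state changes slowly and steers many fast low-level steps, loosely mirroring the brain-region analogy in the excerpt.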

Apple study: LLMs also benefit from an old productivity trick

In a new study co-authored by Apple researchers, an open-source large language model (LLM) saw big performance improvements after being told to check its own work by using one simple productivity trick. Here are the details.

A bit of context

After an LLM is trained, its quality is usually refined further through a post-training step known as reinforcement learning from …

LLMs’ ‘Simulated Reasoning’ Abilities Are a ‘Brittle Mirage,’ Researchers Find

An anonymous reader quotes a report from Ars Technica: In recent months, the AI industry has started moving toward so-called simulated reasoning models that use a “chain of thought” process to work through tricky problems in multiple logical steps. At the same time, recent research has cast doubt on whether those models have even a basic understanding of general logical …
