Google appears to be accelerating preparations for a new wave of AI features. Recent backend changes suggest that the Deep Think model has been activated behind the scenes, though it remains hidden in the user interface. Early access tests show that Deep Think is functional and returns responses, albeit more slowly than previous models: handling 10 prompts reportedly takes around five minutes. Despite the longer wait, the output quality appears to match or exceed that of the Kingfall model previously spotted on AI Studio. This points to Deep Think potentially becoming the next major advancement in Google’s AI portfolio.

BREAKING 🚨: Google is preparing to release Deep Think on Gemini in the coming weeks and working on a new Agent Mode!

Deep Think on Gemini performs very close to the leaked “Kingfall” model.

What’s Agent Mode? Check below 👀 pic.twitter.com/kuppTgkjXA

— TestingCatalog News 🗞 (@testingcatalog) July 10, 2025

For those following the evolution of Google’s models, this development also sheds light on earlier speculation about whether Kingfall was Gemini 3. With Deep Think now positioned for release, it seems more likely that Kingfall was in fact Deep Think. Sources suggest a public Deep Think rollout could happen as early as next week.

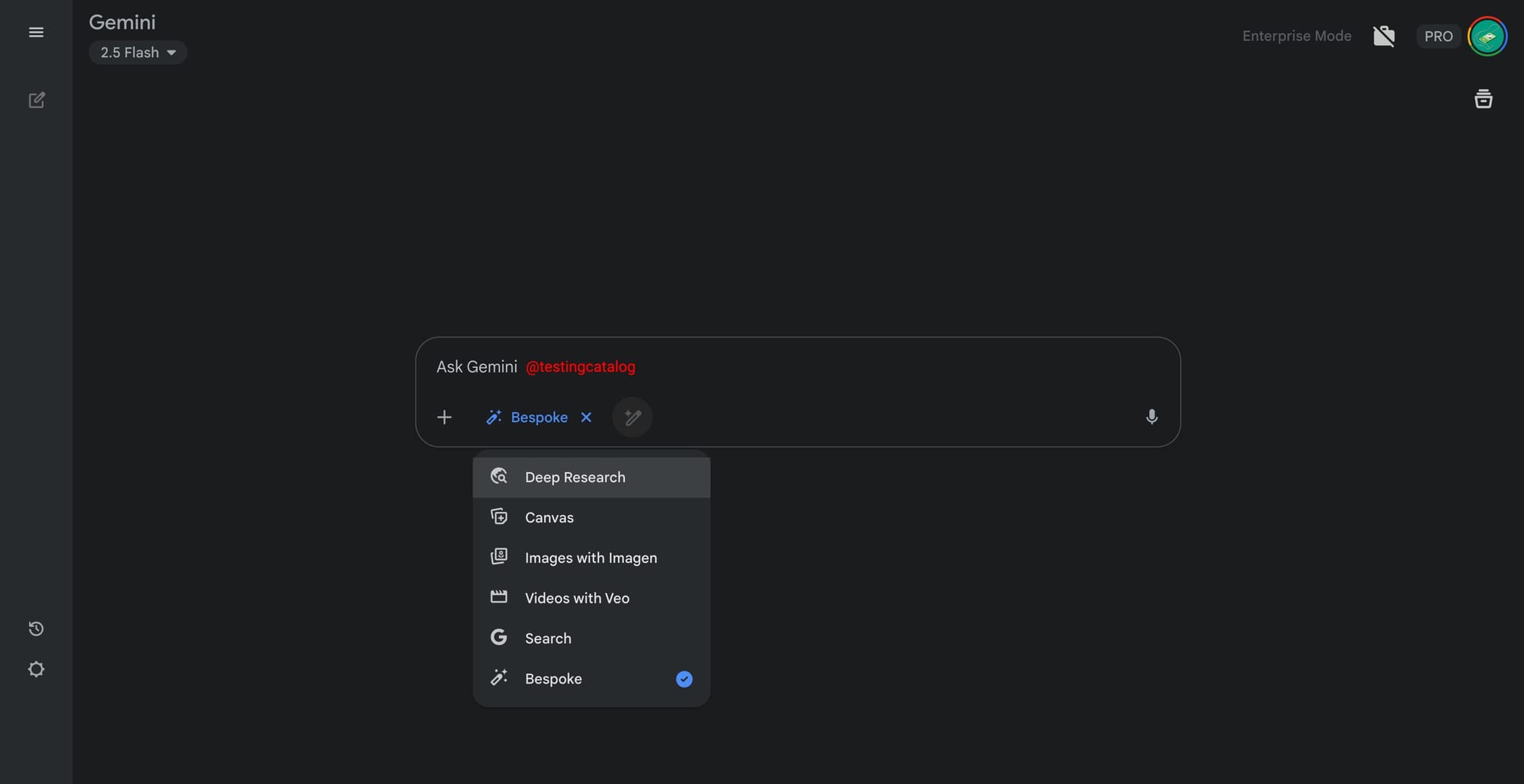

In addition to Deep Think, new features have surfaced within Gemini’s toolbox. One is a tool named Bespoke, which lacks a detailed description but hints at providing a personalized experience, possibly using user history or adapting output to individual preferences. There are also rumors this could relate to a storybook feature, although that seems less likely given the current placement of Bespoke in the UI.

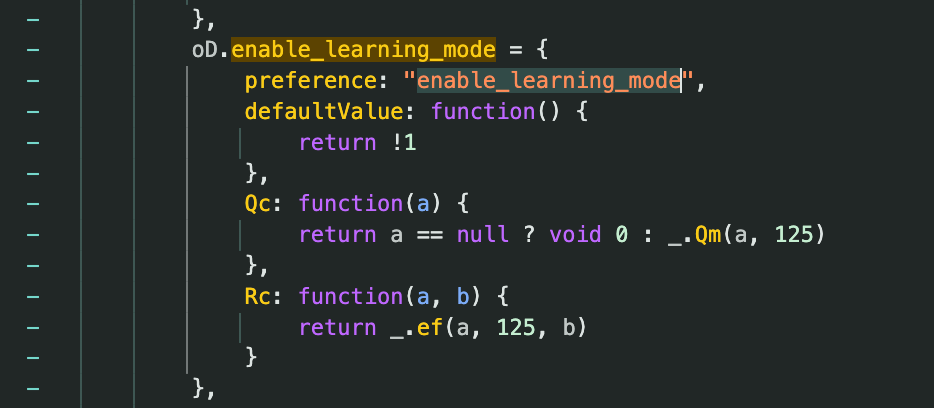

A separate Learning Mode setting has also appeared. It is speculated to be aimed at students, perhaps offering study assistance similar to ChatGPT’s Study Together. No details or release timeline have been confirmed.

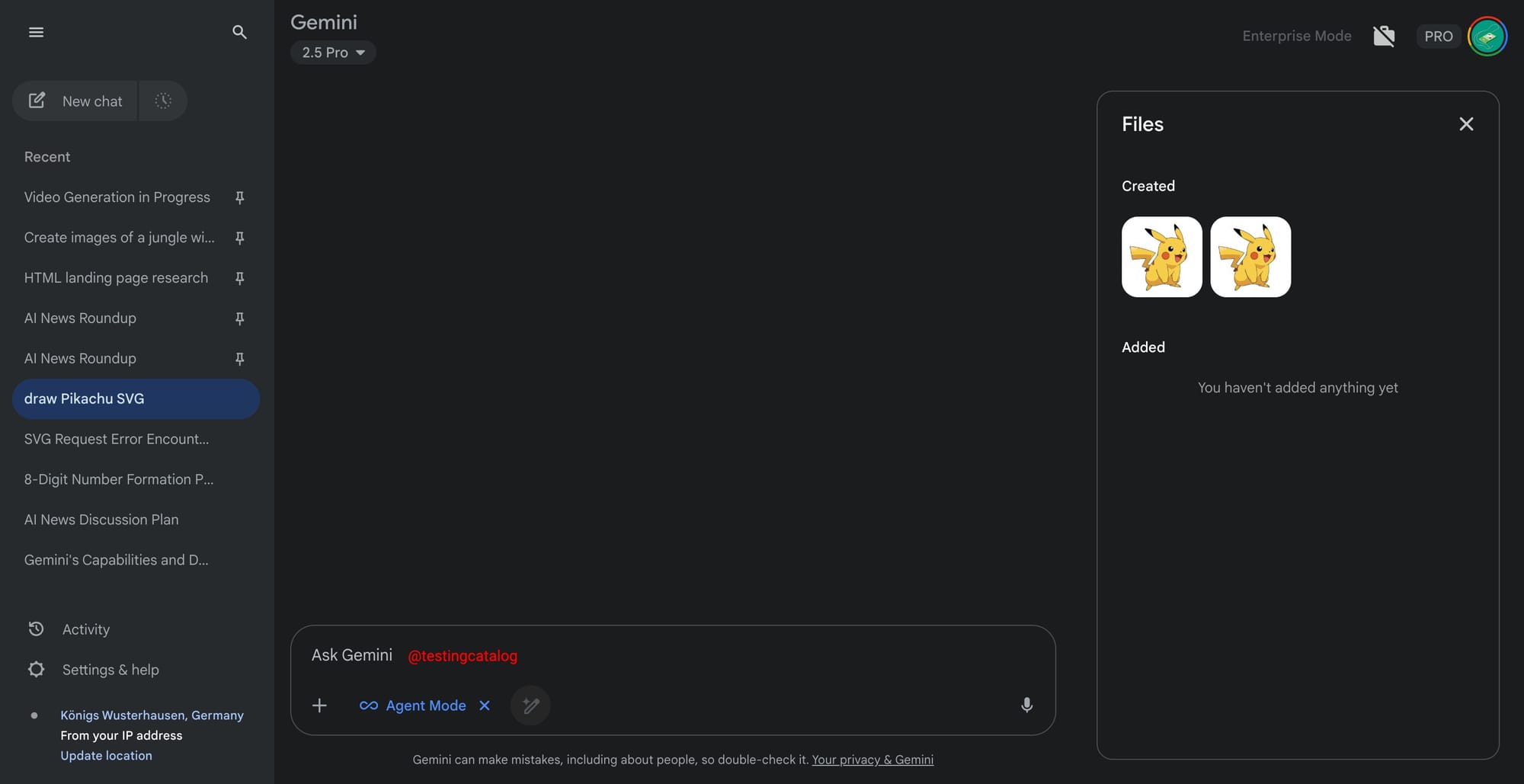

Another notable discovery is Agent Mode, indicated by an infinity symbol and described as enabling “Autonomous Exploration, Planning and Execution.” While specifics are unclear, this could involve integrating MCP (Model Context Protocol) servers or leveraging Google’s A2A (Agent2Agent) protocol, allowing the model to handle complex tasks autonomously over extended periods.

Images generated by Gemini in Agent Mode may be saved to a dedicated folder. Does this mean the Gemini Agent will be able to work with file storage to complete its tasks? If so, it could become a very big deal!

These developments reflect Google’s ongoing strategy to expand the utility and flexibility of Gemini, with a clear focus on personalized and autonomous AI systems. TestingCatalog will continue monitoring for official updates, particularly as Deep Think’s release is likely imminent, while other features like Bespoke, Learning Mode, and Agent Mode remain under wraps for now.