OpenAI has taken a decisive turn in how its AI assistant, ChatGPT, responds to users. As of August 2025, the chatbot will no longer offer direct answers to questions involving emotional distress, mental health, or high-stakes personal decisions—reflecting a shift in how the company sees the boundaries of responsible AI use.

A Growing Concern Over Emotional Reliance

OpenAI’s latest product update is rooted in a quiet but important observation: some users have begun relying on ChatGPT as an emotional sounding board, especially in times of personal uncertainty. According to OpenAI, users have brought the model questions like “Should I leave my partner?” or “Am I making the right life decision?”

These types of questions will now be met with non-directive responses, focused on reflection and prompting rather than advice. Instead of taking a stance, ChatGPT will encourage users to explore perspectives, weigh trade-offs, and consider next steps—without deciding for them.

This change, the company explains, is designed to prevent emotional dependency and avoid positioning AI as a substitute for professional help or human judgment.

Gentle Nudges and a Quieter Tone

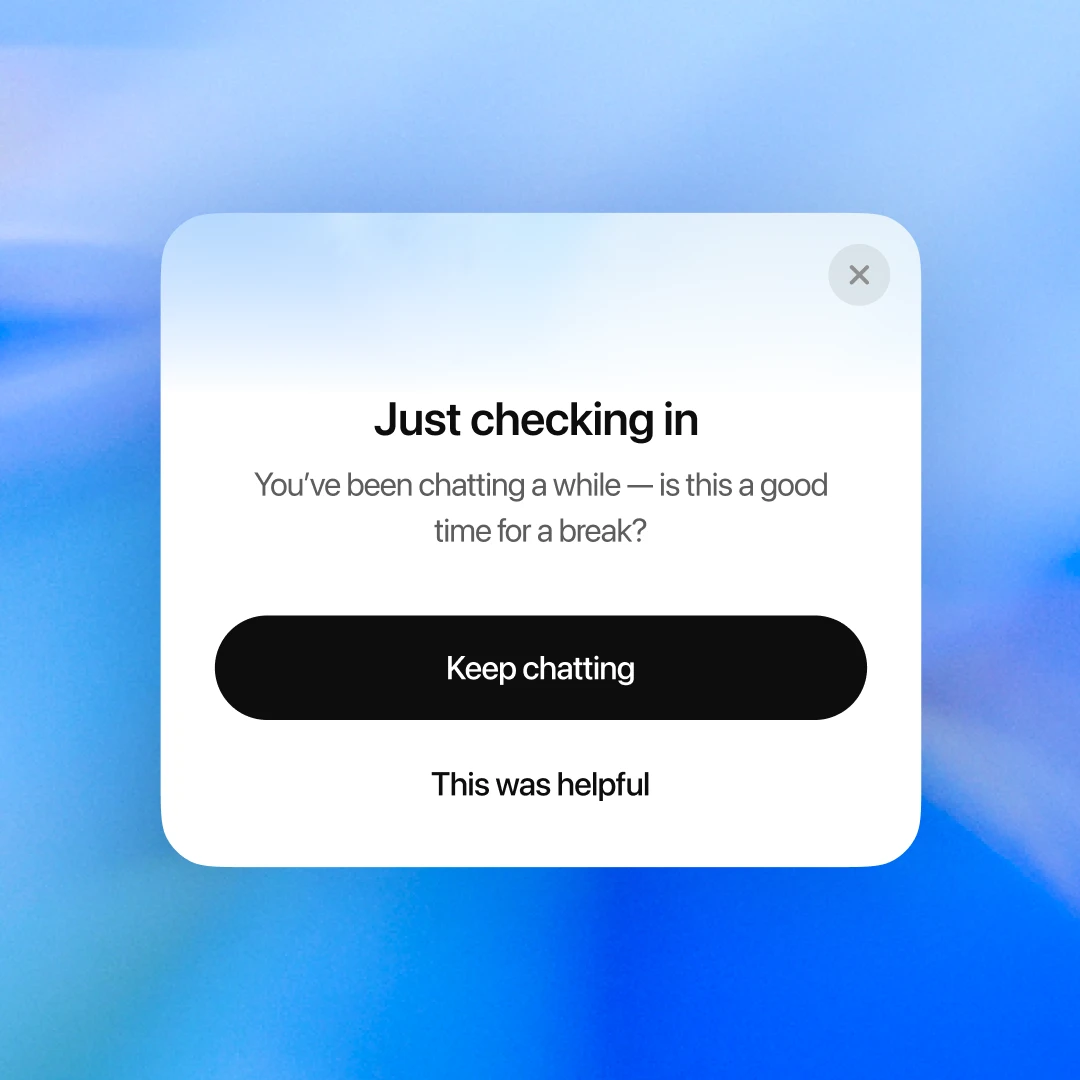

Two key interface changes reflect this new direction. First, users will now see subtle reminders to take breaks during long chat sessions. These interruptions are meant to encourage healthier usage patterns, reduce extended reliance, and provide space for independent thinking.

Second, when the model detects a question involving mental health, emotional strain, or complex personal stakes, it will avoid giving definitive answers. The system instead aims to redirect the conversation toward thinking frameworks and evidence-based resources.

This design reflects OpenAI’s broader philosophy: success isn’t measured by how long users stay, but by whether they find clarity and move forward. “Often, less time in the product is a sign it worked,” the company noted in its statement.

Expert Input Driving the Shift

OpenAI says these updates were guided by extensive collaboration with experts in psychiatry, general medicine, youth development, and human-computer interaction (HCI). More than 90 physicians from over 30 countries contributed to shaping the evaluation process for ChatGPT’s multi-turn conversations.

These experts developed custom rubrics to ensure that the model handles emotionally complex situations with appropriate care. Alongside clinicians, OpenAI also brought in HCI researchers to help define early-warning signs of distress and test the chatbot’s responses in sensitive contexts. The goal is to make sure ChatGPT can recognize when it’s out of its depth and respond accordingly.

Earlier in 2025, the GPT-4o model reportedly failed to detect emotional distress in a small number of user interactions. While these incidents were rare, they were enough to prompt a reassessment of safeguards and accelerate efforts to train the system to redirect users more responsibly.

Rethinking the Role of AI in Difficult Moments

This shift signals a maturing approach to AI-human interaction. OpenAI now draws a firmer boundary around the role its chatbot plays—not as a therapist, confidant, or decision-maker, but as a tool to help people think more clearly.

The change also raises broader questions about how users interact with intelligent systems. As AI becomes more responsive, empathetic, and convincing, the illusion of emotional intimacy can lead people to open up in ways that blur the lines between technology and human support.

OpenAI’s response (pull back, don’t answer directly, guide instead of decide) represents a conscious move to preserve that line. It reflects a commitment to maintaining trust and accountability, rather than maximizing engagement, in moments where users are most vulnerable.