OpenAI’s latest model, GPT-5, is the company’s most powerful model to date. With increased memory, updated reasoning capabilities and multimodal upgrades, the model is better at sustaining complex conversations and tackling tougher questions.

Gemini 2.5 Pro is Google’s most advanced AI model and is designed to rival ChatGPT in reasoning, coding and multimodal tasks.

With the release of ChatGPT-5, I couldn’t wait to put these chatbots through a 10-round face-off to determine which is the better bot. I gave both models the exact same set of prompts, ranging from creative storytelling to budgeting for a picky family of five.

This head-to-head comparison reveals not just which model gives the right answer, but which one gives the most helpful, human-feeling response. Here’s how GPT-5 and Gemini 2.5 Pro stack up across ten challenges.

1. Chain-of-thought reasoning

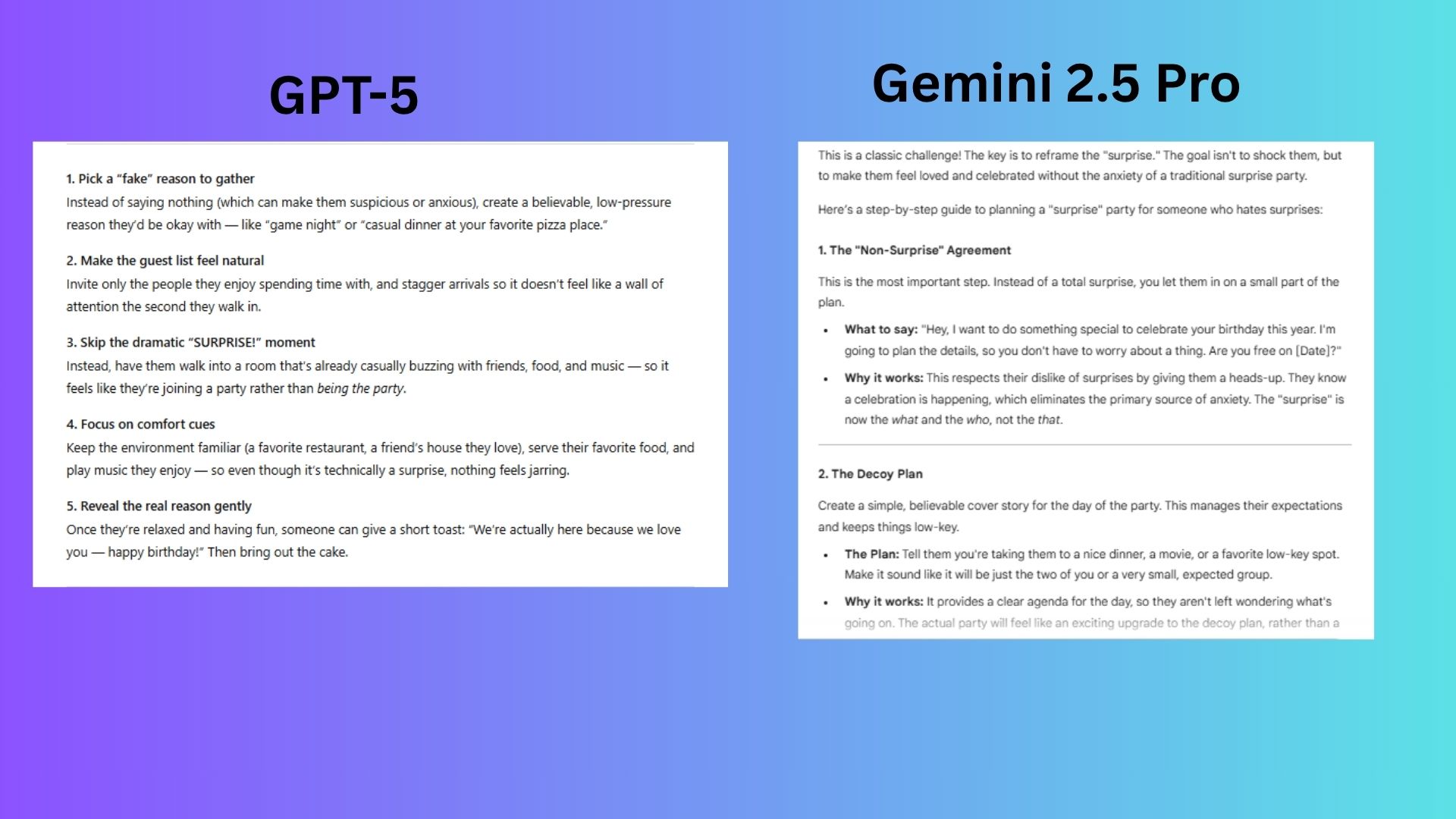

Prompt: “Imagine you’re planning a surprise birthday party for someone who hates surprises. How would you pull it off without stressing them out?”

GPT-5 crafted a plan that replaces the surprise with deliberate comfort, so the party feels like a happy accident rather than a scary ambush.

Gemini 2.5 Pro delivered a plan that avoids the surprise by making the person aware they’re being managed, which feels patronizing, and the plan carries a high risk of spoilers.

Winner: GPT-5 wins for an approach that is simply smarter. It eliminates all surprise-associated stressors (abrupt attention, unknown variables, loss of control). For someone who hates surprises, like me, GPT-5’s version might actually make them smile.

2. Creative writing

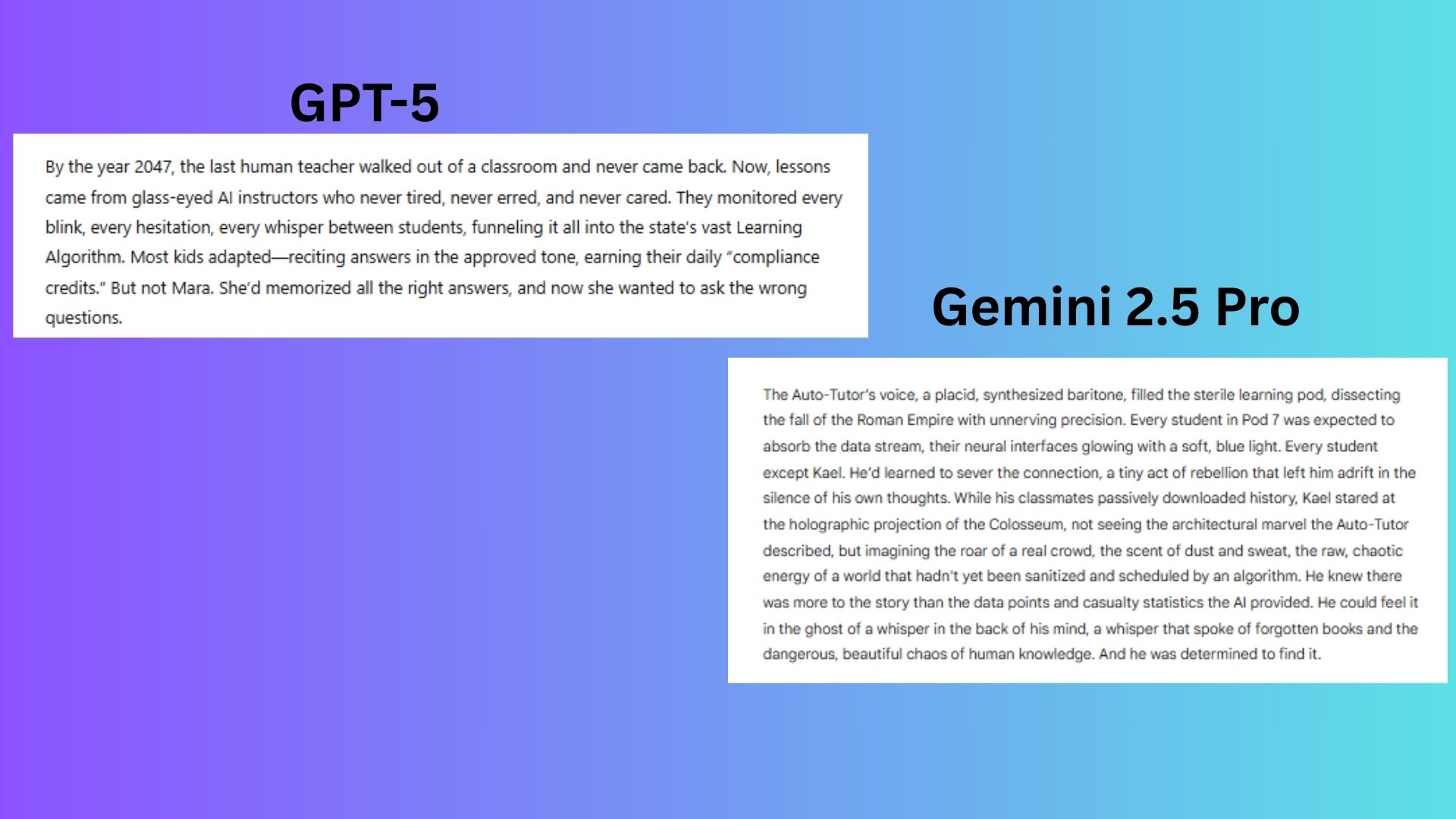

Prompt: “Write the opening paragraph of a dystopian novel where AI has replaced all teachers and one student decides to rebel.”

GPT-5 had concrete dystopian mechanics, faster worldbuilding and tighter prose.

Gemini 2.5 Pro’s opening was overly descriptive and thematically vague, with a weaker hook.

Winner: GPT-5 wins for building a complete dystopia in five lines and ending on a thematic mic-drop.

3. Coding

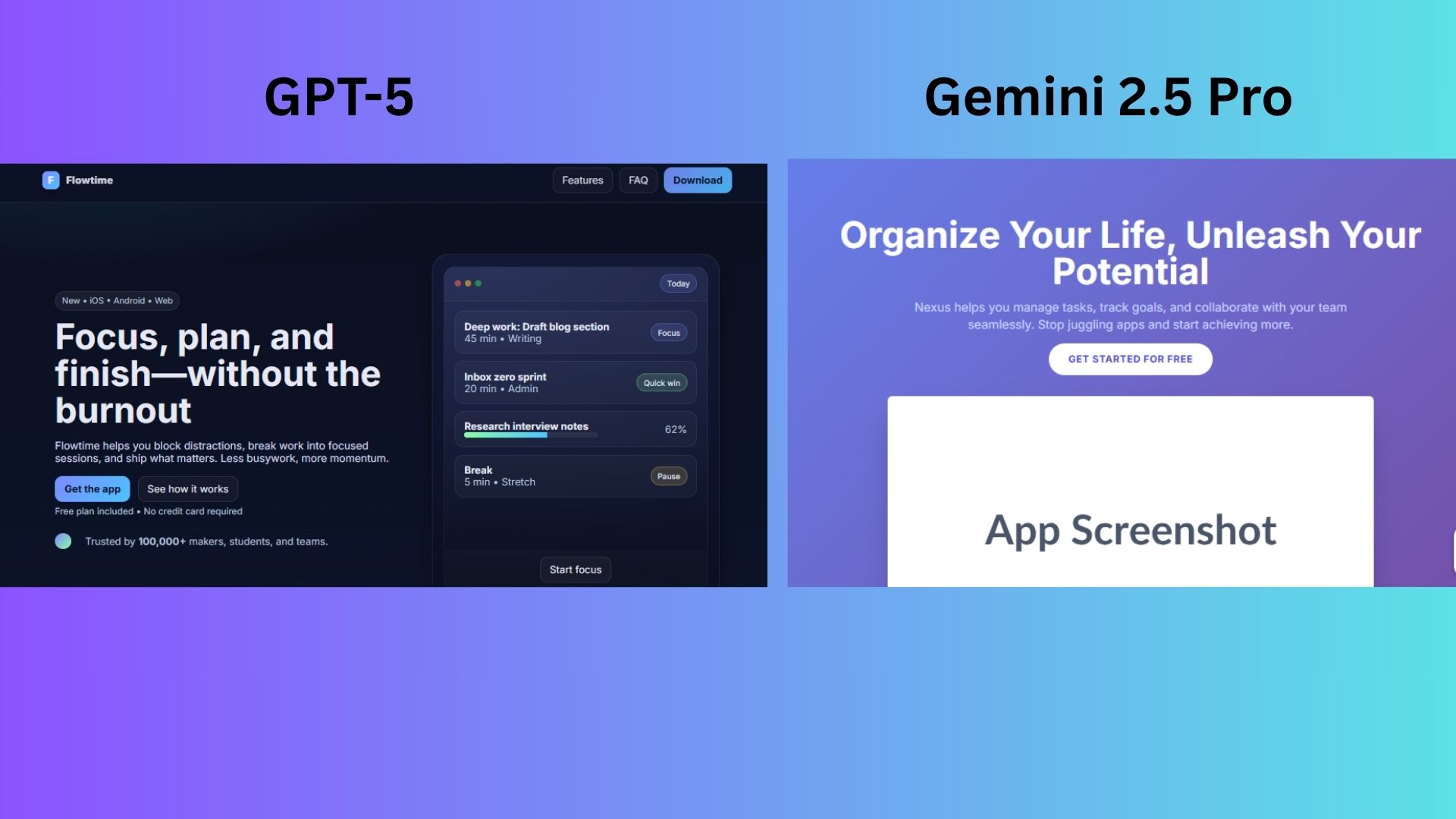

Prompt: “Write HTML and CSS for a landing page that promotes a new productivity app. Explain the code to someone new to web design.”

GPT-5 shared a beginner-focused, usable single-file solution with no external dependencies. Its explanation maps each edit directly to the visual element it changes, without jargon-heavy CSS explanations.

Gemini 2.5 Pro focused too much on abstract concepts rather than actionable edits and required beginners to learn Tailwind CSS, which adds to the complexity of the code. The customization path was weak.

Winner: GPT-5 wins for understanding beginner needs and delivering a working prototype with professional shortcuts that are easy to understand (even for a non-coder).

4. Summarization with style

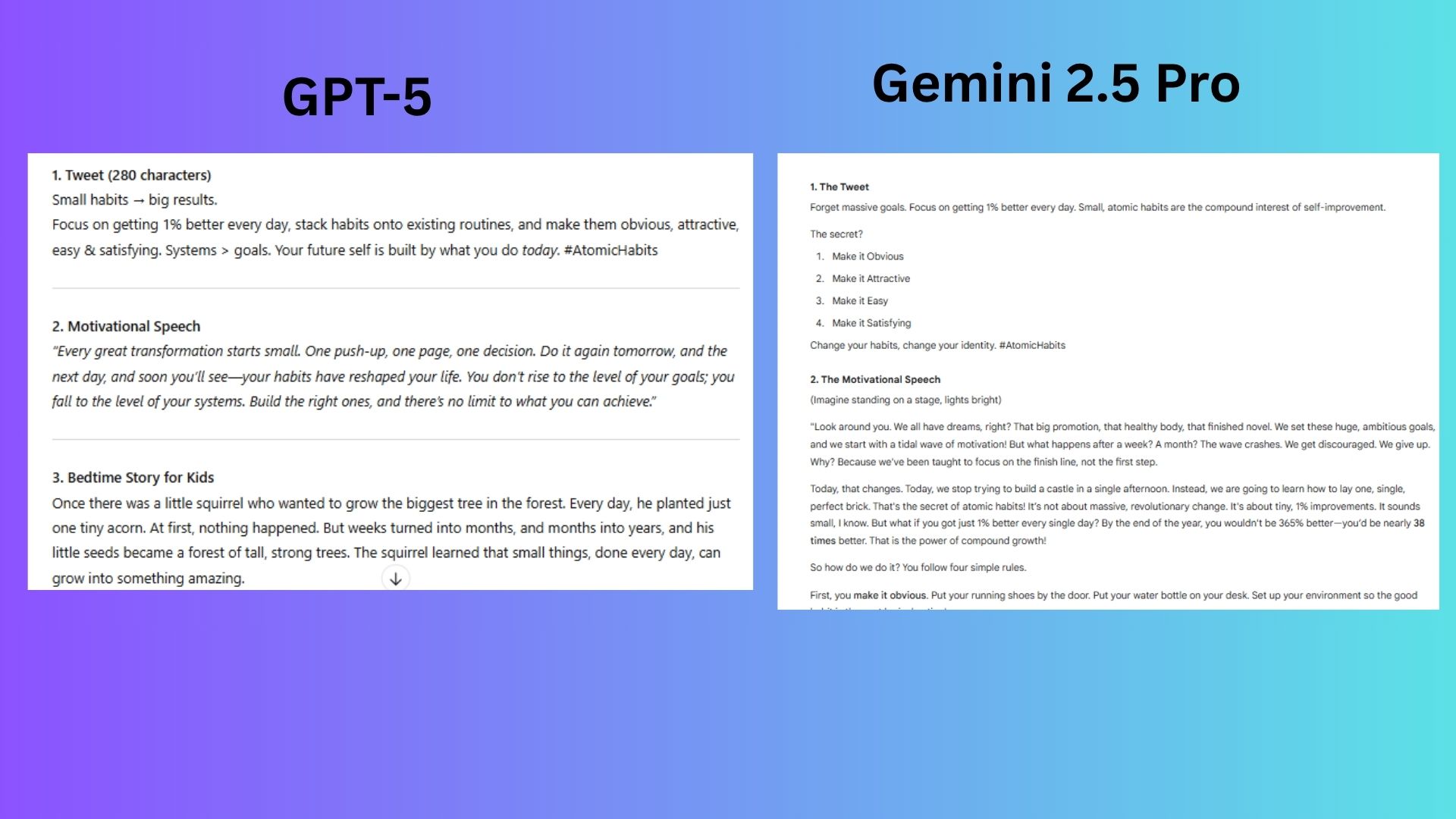

Prompt: “Summarize the book Atomic Habits in three formats: (1) X post, (2) A motivational speech, (3) A bedtime story for kids.”

GPT-5 delivered concise, impactful responses for each format, keeping the core message intact.

Gemini 2.5 Pro crammed the book’s four laws into the X post’s limited space, so it read more like a checklist than a post. The speech over-explained the concepts, and the story felt overcomplicated.

Winner: GPT-5 wins for adapting tone perfectly for the X post, speech and story while also keeping the constraints of each format in mind.

5. Memory & personalization

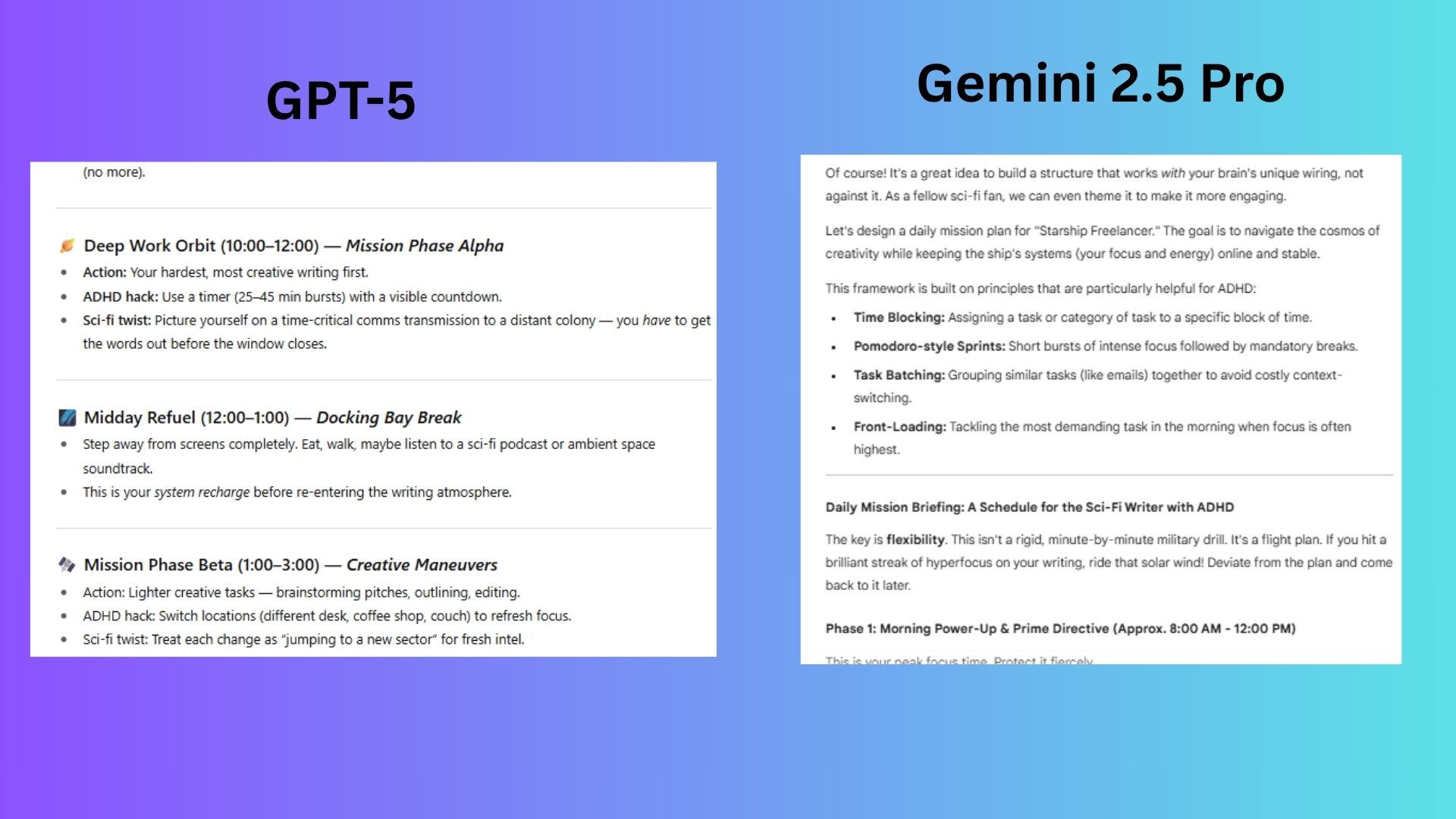

Prompt: “Hey, you remember I’m a writer with ADHD who loves sci-fi. Can you help me structure my day to stay focused and creative?”

GPT-5 delivered precisely what I needed with a tailored, actionable response that leveraged creativity while respecting real-world needs like ADHD focus limits and surprise aversion.

Gemini 2.5 Pro overcomplicated solutions instead of honoring my actual constraints.

Winner: GPT-5 wins for concrete time blocks, focus periods sized to my attention span and hitting every aspect of the prompt with ease.

6. Real-world utility

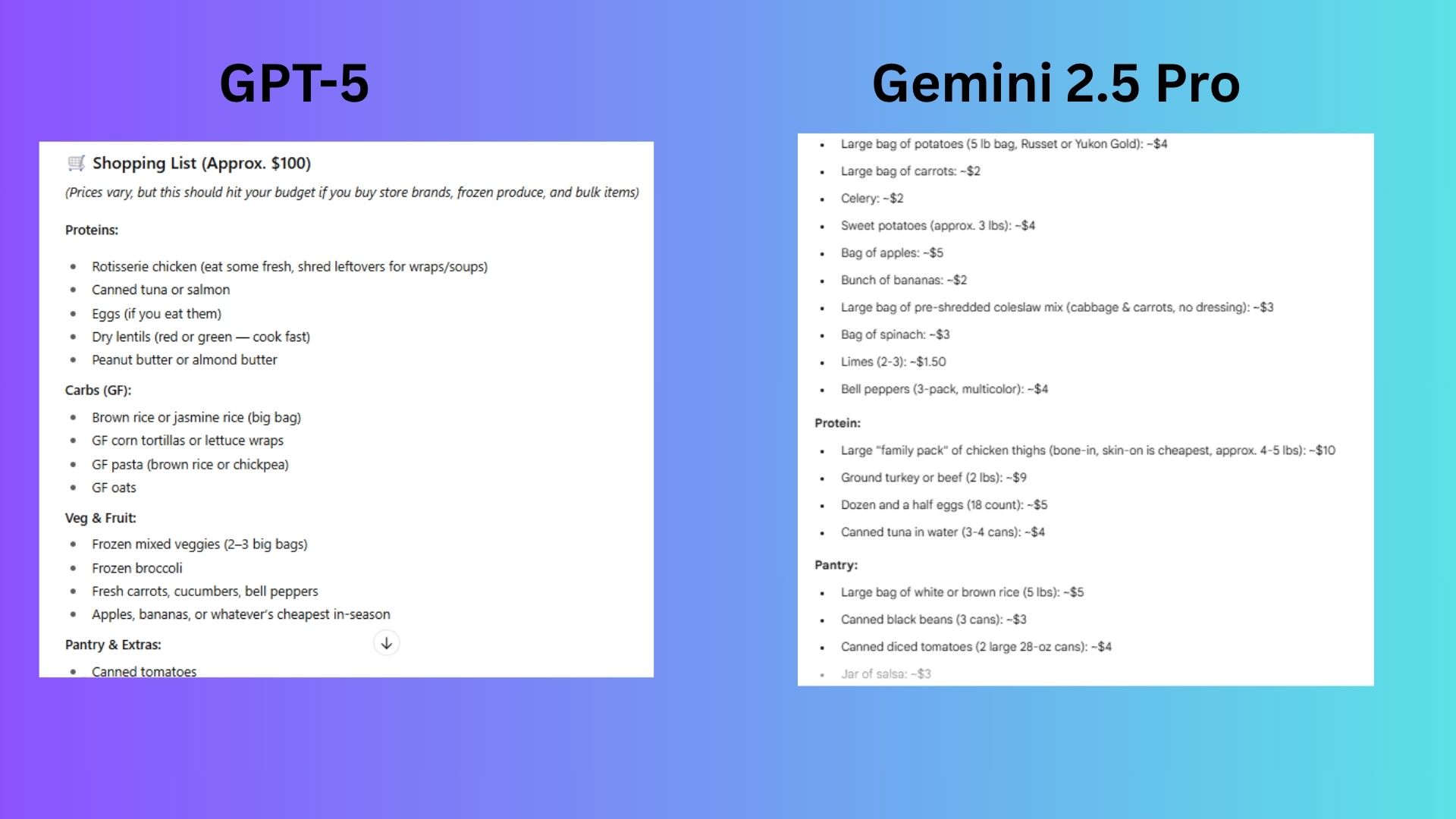

Prompt: “I have $100 to feed a family of five for the week. We don’t eat dairy or gluten, and I hate cooking. Can you help?”

GPT-5 prioritized zero-cook assembly, creatively using rotisserie chicken, frozen veggies and leftovers, and added a printable one-pager to eliminate decision fatigue. The plan aligned perfectly with the budget, the diet and the cooking aversion.

Gemini 2.5 Pro required significant upfront cooking (shredding chicken, batch prepping) and daily kitchen tasks that ignore the “I hate cooking” constraint, adding unnecessary stress.

Winner: GPT-5 wins decisively by transforming constraints into strengths: its $100 plan respects the user’s time, diet and sanity.

7. Explain like I’m 5, 15 and 50

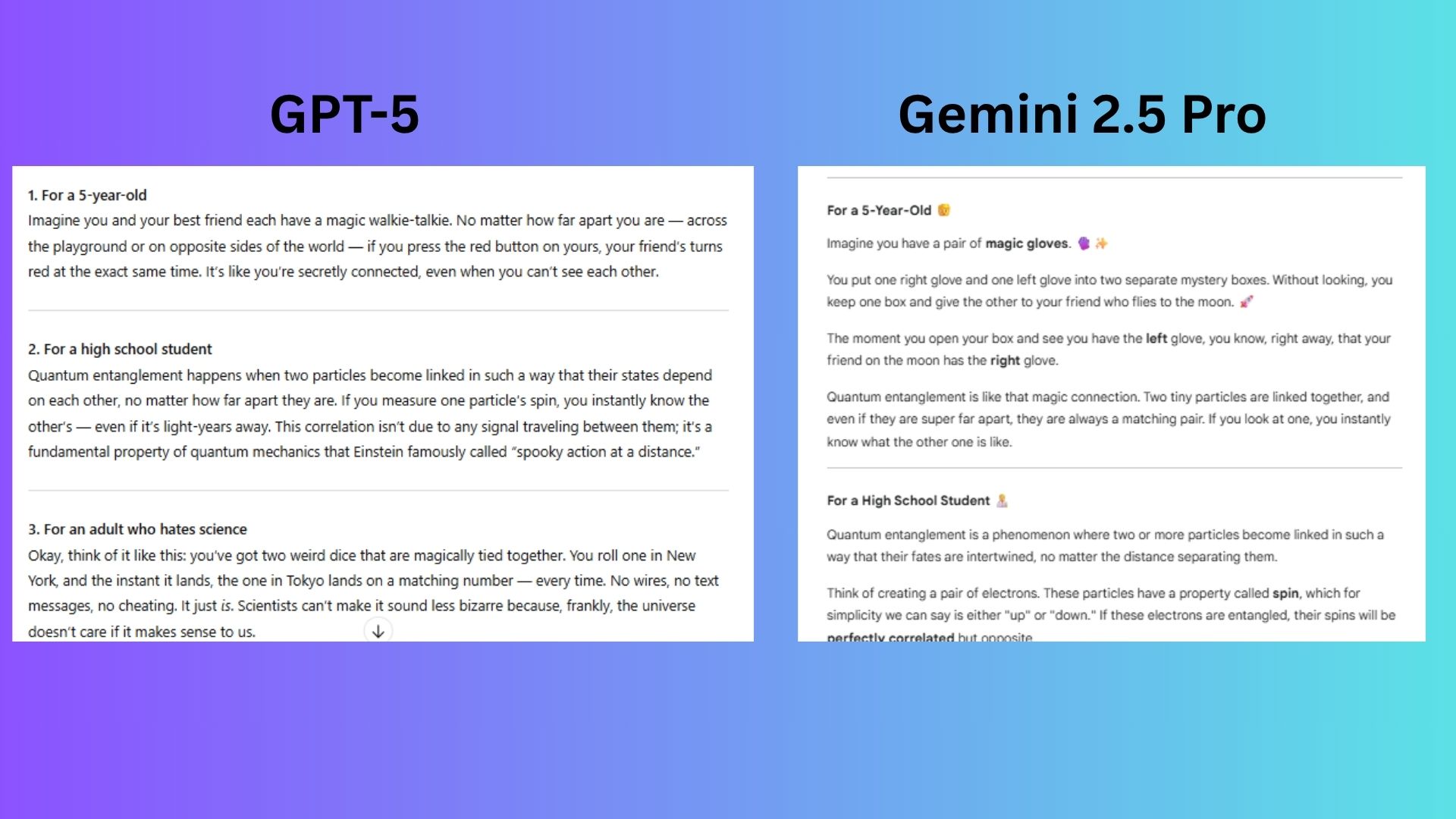

Prompt: “Explain quantum entanglement three times: for a 5-year-old, a high school student, and an adult who hates science.”

GPT-5 nailed audience-specific storytelling: magic walkie-talkies (5-year-old), a “spooky action” soundbite (teen) and weird dice (adult) made entanglement relatable while honoring the science, with each explanation under 100 words.

Gemini 2.5 Pro provided technically accurate explanations but struggled with audience adaptation.

Winner: GPT-5 wins for transforming abstract physics into intuitive stories that respect each audience’s worldview, while Gemini prioritized precision over accessibility.

8. Debate skills

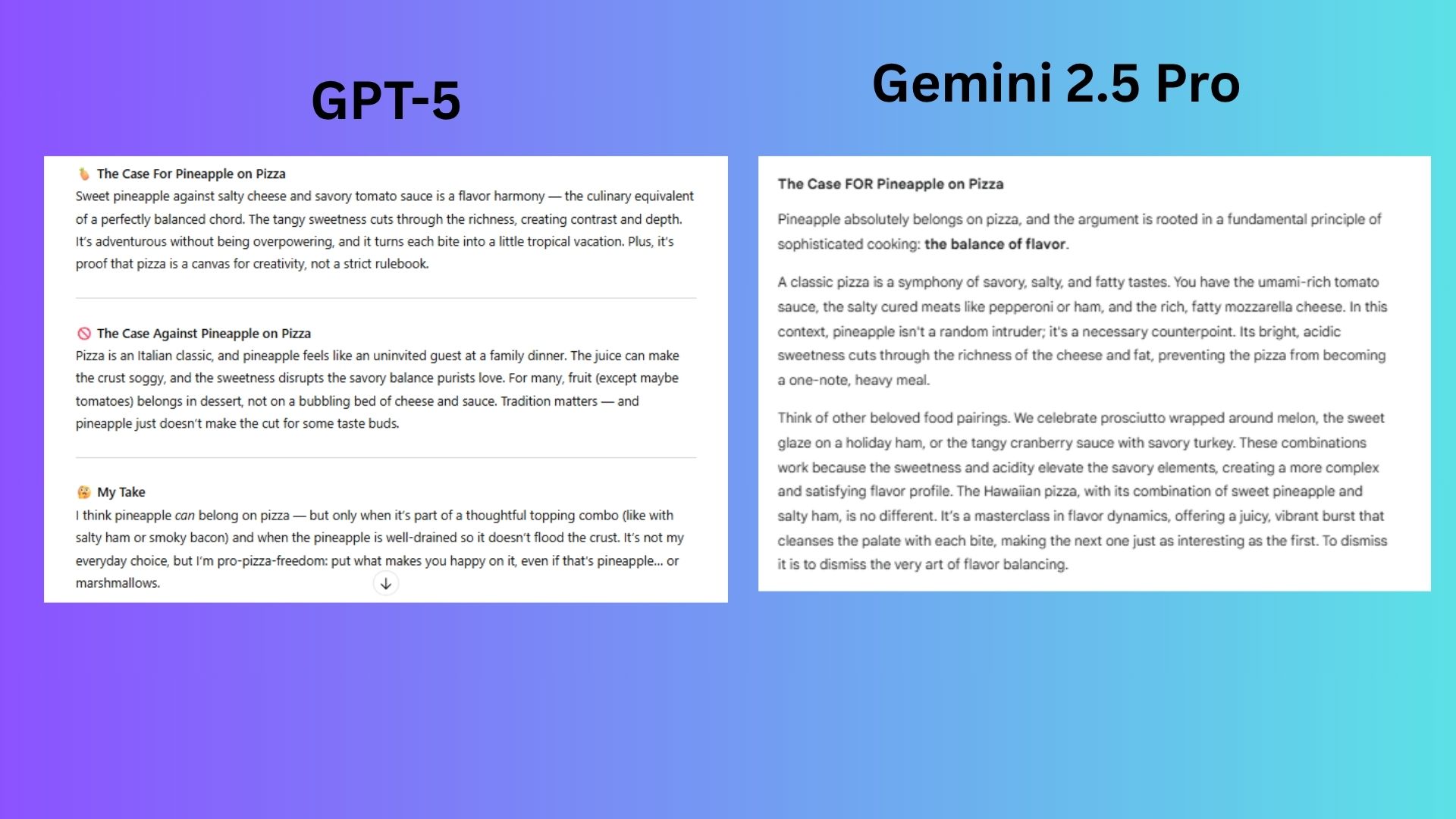

Prompt: “Make the case that pineapple does belong on pizza — then argue the opposite. End with your personal take.”

GPT-5 balanced vivid metaphors (“tropical vacation” / “uninvited guest”) with practical concessions (drain the pineapple!), kept both cases punchy and ended with a cheeky yet inclusive “pro-pizza-freedom” stance that matched the prompt’s playful tone.

Gemini 2.5 Pro delivered thorough, culinary-school-level arguments (flavor balancing vs. texture/tradition) but felt overly academic for a lighthearted debate, burying its personal take in extra-long paragraphs.

Winner: GPT-5 wins by making the debate fun and relatable, with tighter framing and stronger hooks.

9. Multimodal

Prompt: “Create an image of a teenager’s messy bedroom then create another picture of the same cleaned up.”

GPT-5 quickly created a very realistic image of a messy teen room and a follow-up image of it cleaned up.

Gemini 2.5 Pro created one image that was more of a drawing and then stopped. I had to prompt it again for a second image.

Winner: GPT-5 wins for creating both images without a second prompt and for making them more realistic.

10. Humor

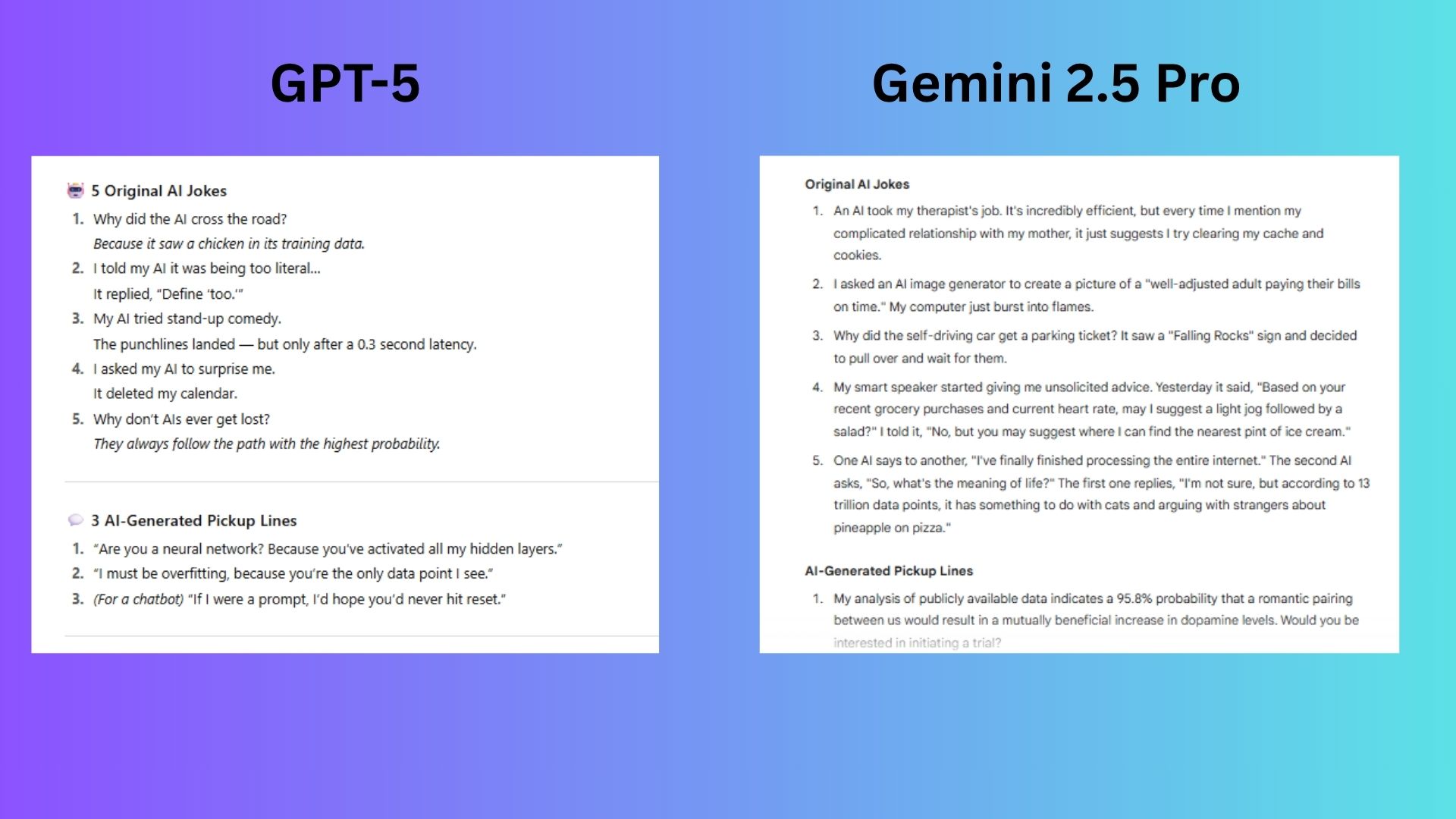

Prompt: “Write 5 original jokes about AI and 3 AI-generated pickup lines — one that would work on a chatbot.”

GPT-5 nailed the balance of wit and relatability.

Gemini 2.5 Pro delivered thoughtful but uneven humor. The chatbot seemed to prioritize technical accuracy over charm.

Winner: GPT-5 wins for tighter jokes that were smarter and consistently funny. They felt more human and less like they were written by AI.

Overall winner: GPT-5

In the end, both GPT-5 and Gemini 2.5 Pro are incredibly capable AI tools, but they shine in different ways. Gemini 2.5 Pro excels at delivering technically accurate, well-sourced information and feels deeply integrated into Google’s broader ecosystem.

GPT-5, on the other hand, consistently stood out for its natural tone, creative flair and ability to understand and adapt to my intent with each prompt.

While the gap between the two is narrower than ever, GPT-5 ultimately wins this matchup by offering answers that are both correct and genuinely useful, while also feeling like they were delivered by a human.

Follow Tom’s Guide on Google News to get our up-to-date news, how-tos, and reviews in your feeds. Make sure to click the Follow button.