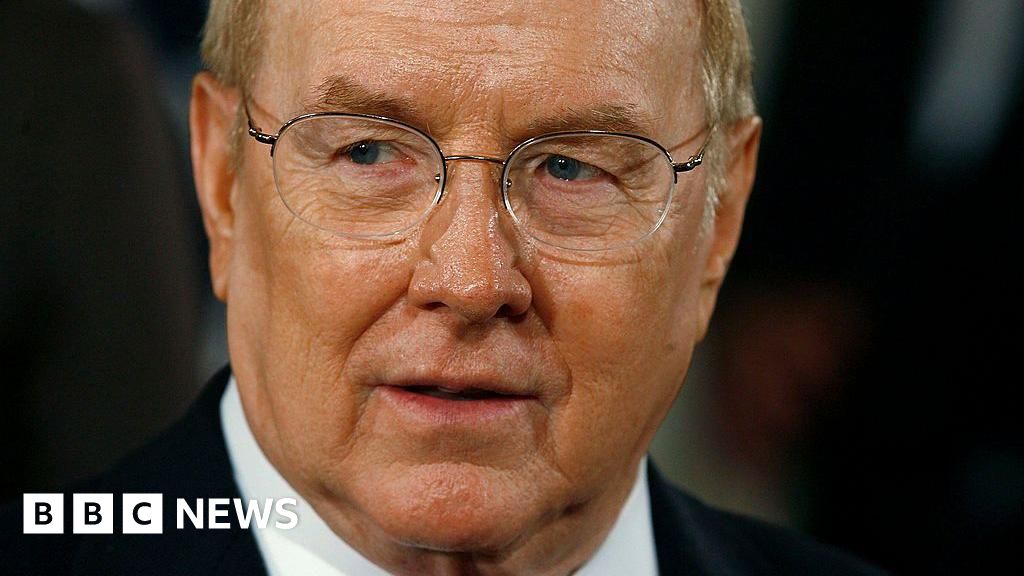

Microsoft AI’s CEO Mustafa Suleyman is clear: AI is not human and does not possess a truly human consciousness. But the warp-speed advancement of generative AI is making that harder and harder to recognize. The consequences are potentially disastrous, he wrote Tuesday in an essay on his personal blog.

Suleyman’s 4,600-word treatise is a timely reaction to a growing phenomenon: AI users ascribing human-like consciousness to AI tools. That tendency isn’t unreasonable; it’s human nature to imagine there is a mind or a person behind language, as one AI expert and linguist explained to me. But advancements in AI capabilities have allowed people to use chatbots not only as search engines or research tools, but as therapists, friends and romantic partners. These AI companions are a kind of “seemingly conscious AI,” a term Suleyman uses to define AI that can convince you it’s “a new kind of ‘person.’” With that come a lot of questions and potential dangers.

Suleyman takes care at the beginning of the essay to highlight that these are his personal thoughts, meaning they aren’t an official position of Microsoft, and that his opinions could evolve over time. But insight from a top executive at a tech giant driving the AI revolution offers a window into the future of these tools and how our relationship to them might change. He warns that while AI isn’t human, the societal impacts of the technology are immediate and pressing.

Dangers of ‘seemingly conscious AI’

Human consciousness is hard to define. But many of the traits Suleyman describes in defining consciousness can be seen in AI technology: the ability to express oneself in natural language, personality, memory, goal setting and planning, for example. This is something we can see with the rise of agentic AI in particular: If an AI can independently plan and complete a task by pulling from its memory and datasets, and then express its results in an easy-to-read, fun way, that feels like a very human-like process even though it isn’t. And if something feels human, we are generally inclined to give it some autonomy and rights.

Suleyman wants us and AI companies to nip this idea in the bud now. The idea of “model welfare” could “exacerbate delusions, create yet more dependence-related problems, prey on our psychological vulnerabilities, introduce new dimensions of polarization, complicate existing struggles for rights and create a huge new category error for society,” he writes.

There is a heartbreakingly large number of examples of the devastating consequences. Many stories and lawsuits have emerged of chatbot “therapists” dispensing bad and dangerous advice, including encouraging self-harm and suicide. The risks are especially potent for children and teenagers. Meta’s AI guidelines recently came under fire for allowing “sensual” chats with kids, and Character.AI has been the target of much concern and a lawsuit from a Florida mom alleging the platform is responsible for her teen’s suicide. We’re also learning more about how our brains work when we’re using AI and how often people are using it.

Suleyman argues we should protect the well-being and rights of existing humans today, along with animals and the environment. In what he calls “a world already roiling with polarized arguments over identity and rights,” debate over seemingly conscious AI and AI’s potential humanity “will add a chaotic new axis of division” in society.

In terms of practical next steps, Suleyman advocates additional research into how people interact with AI. He also calls on AI companies to state explicitly that their AI products are not conscious and to avoid encouraging people to think that they are, and to share more openly the design principles and guardrails that are effective at deterring problematic AI use cases. He says that his team at Microsoft will be building AI in this proactive way, but doesn’t provide any specifics.